I am currently a researcher at Shanghai AI Laboratory. My research interests include Embodied AI, Computer Vision, Robotic Manipulation, and Autonomous Driving.

I received my PhD degree from the Robotics Institute, Shanghai Jiao Tong University, supervised by Prof. Honghai Liu, and obtained my bachelor’s degree from Central South University. I am currently working with Jiangmiao Pang on Embodied AI. Previously, I worked with Prof. Hongyang Li and Prof. Yu Qiao at Shanghai AI Laboratory.

I am an interdisciplinary lifelong learner, with an academic journey that has evolved from bio-mechatronics, computer vision, and autonomous driving to embodied AI. I am currently dedicated to advancing Embodied AGI, with an emphasis on generalizable robotic manipulation.

📝 Selected Publications

🤖 Embodied AI ($^\ast$ indicates equal contribution)

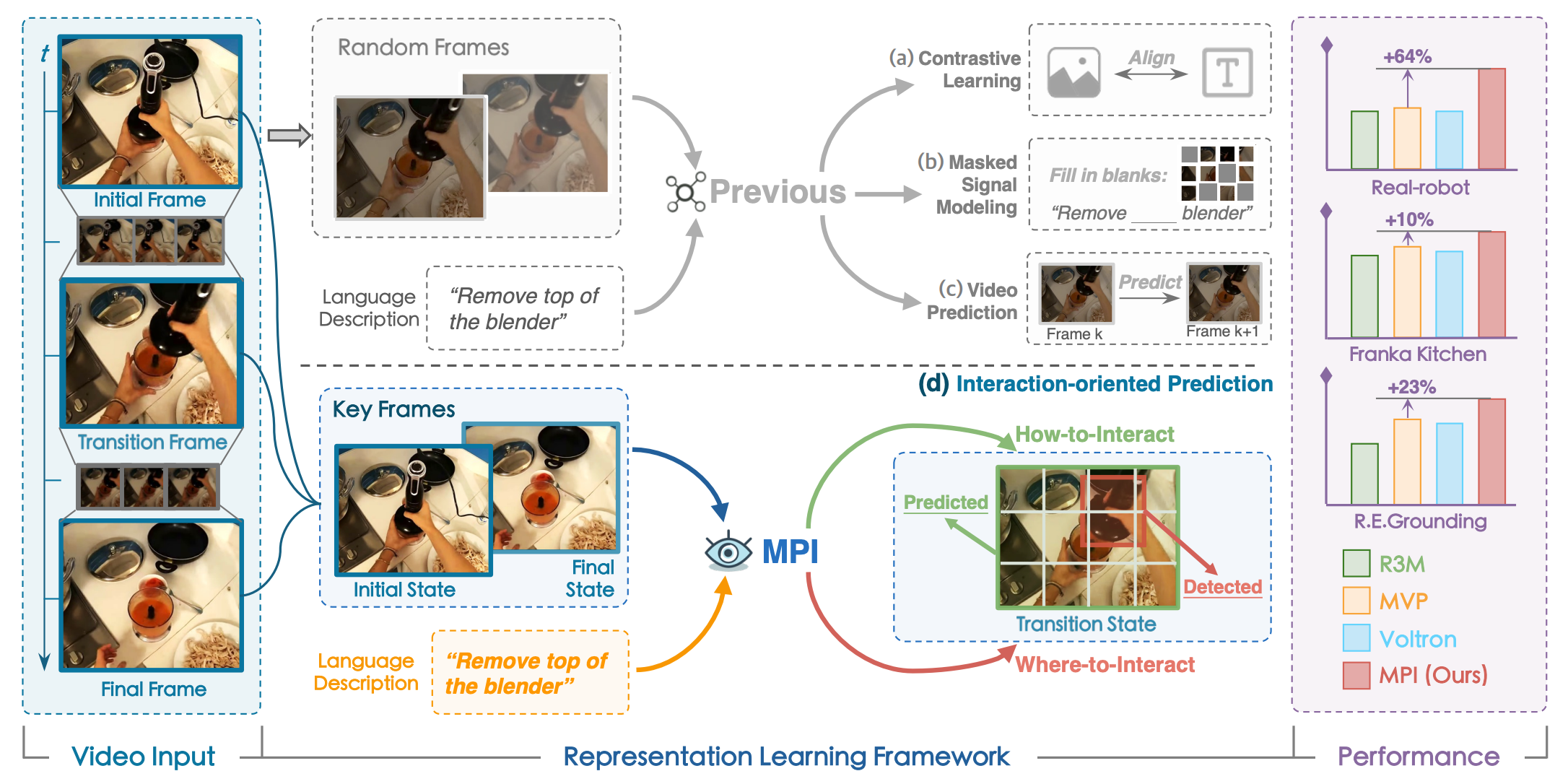

Learning Manipulation by Predicting Interaction

Jia Zeng$^\ast$, Qingwen Bu$^\ast$, Bangjun Wang$^\ast$, Wenke Xia$^\ast$, Li Chen, Hao Dong, H. Song, D. Wang, D. Hu, P. Luo, H. Cui, B. Zhao, X. Li, Y. Qiao, Hongyang Li

- We propose a representation learning framework for robotic manipulation that learns Manipulation by Predicting Interaction (MPI).

- RSS 2024 | Project Page | Code

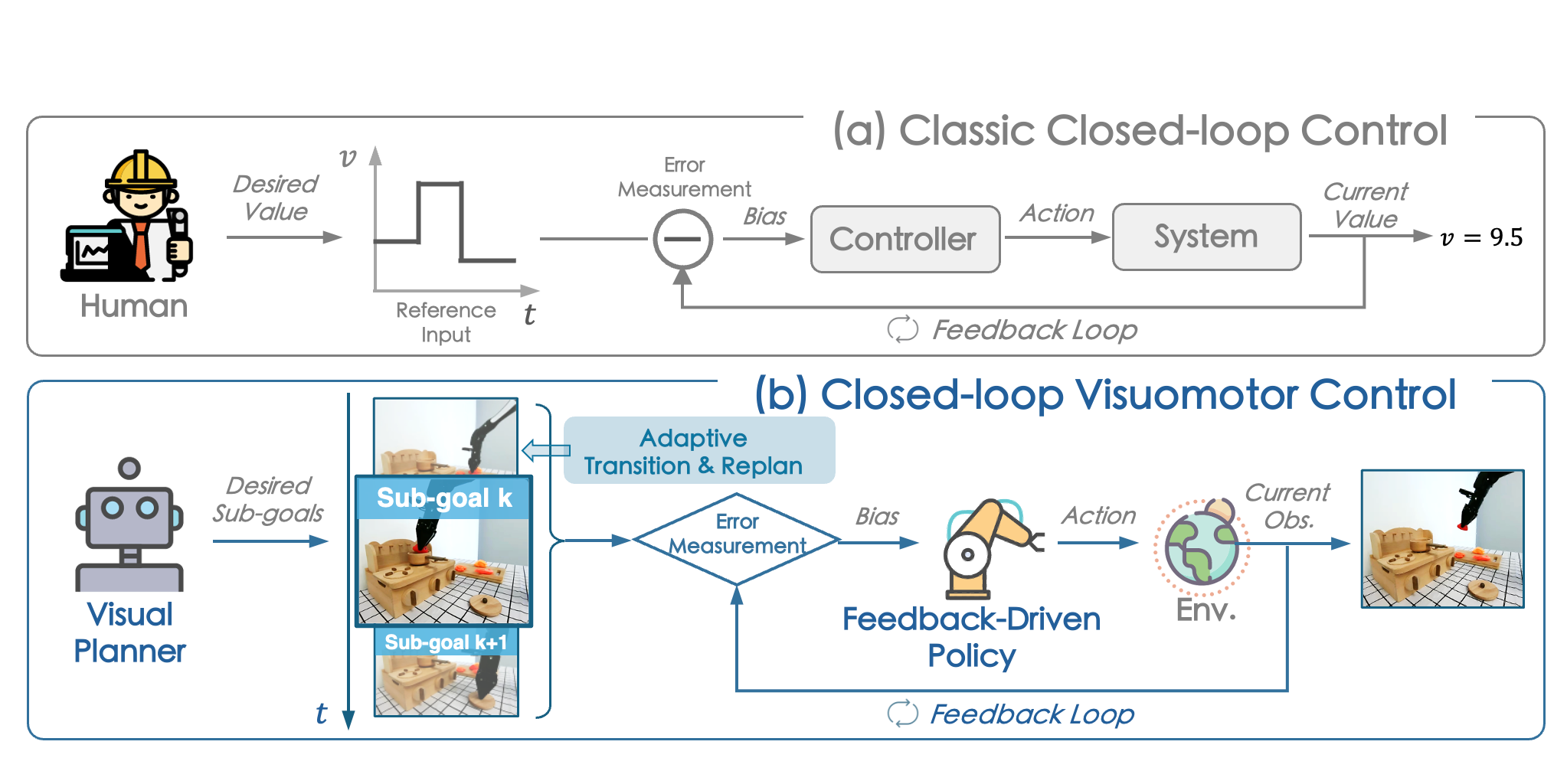

Closed-Loop Visuomotor Control with Generative Expectation for Robotic Manipulation

Qingwen Bu$^\ast$, Jia Zeng$^\ast$, Chen Li$^\ast$, Yanchao Yang, Guyue Zhou, Junchi Yan, Ping Luo, Heming Cui, D. Hu, Yi Ma, Hongyang Li

- We propose CLOVER, which employs a text-conditioned video diffusion model for generating visual plans as reference inputs, then leverages these sub-goals to guide the feedback-driven policy to generate actions with an error measurement strategy.

- NeurIPS 2024 | Code

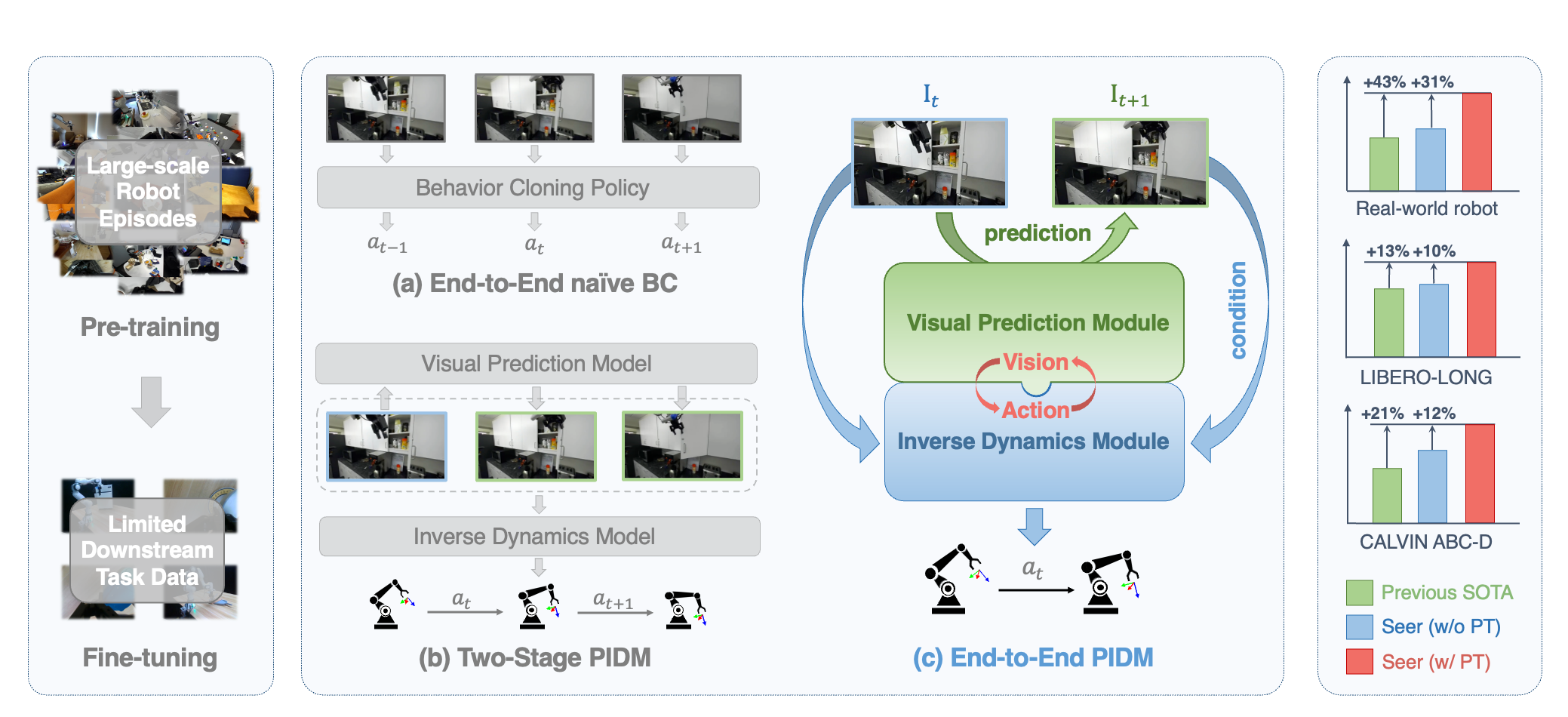

Predictive Inverse Dynamics Models are Scalable Learners for Robotic Manipulation

Yang Tian$^\ast$, Sizhe Yang$^\ast$, Jia Zeng, Ping Wang, Dahua Lin, Hao Dong, Jiangmiao Pang

- We propose an end-to-end model, Seer, which employs an inverse dynamics model based on the robot’s predicted visual states to forecast actions. We term this architecture the Predictive Inverse Dynamics Model (PIDM).

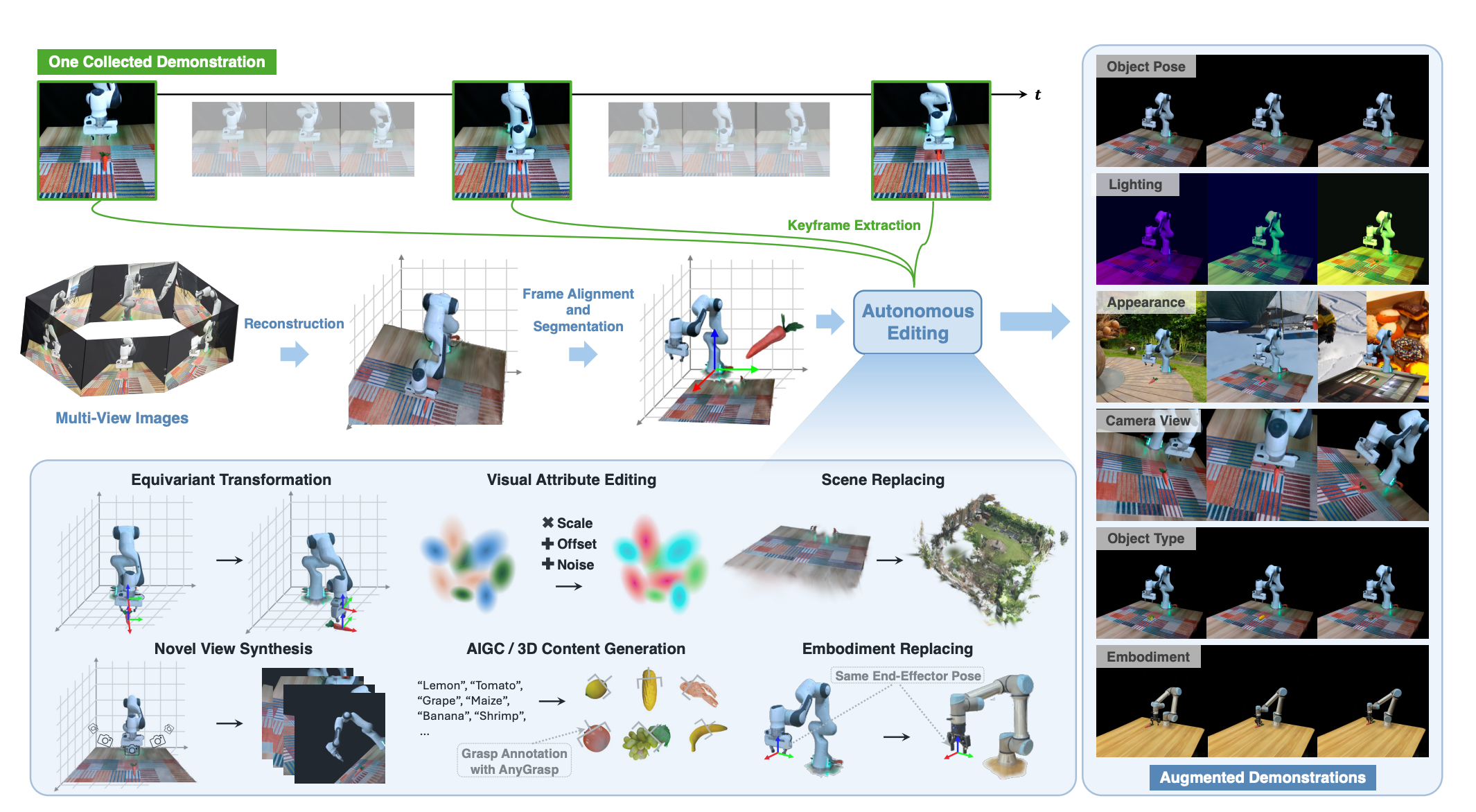

Novel Demonstration Generation with Gaussian Splatting Enables Robust One-Shot Manipulation

Sizhe Yang$^\ast$, Wenye Yu$^\ast$, Jia Zeng, Jun Lv, Kerui Ren, Cewu Lu, Dahua Lin, Jiangmiao Pang

- RoboSplat is a framework that leverages 3D Gaussian Splatting (3DGS) to generate novel demonstrations for RGB-based policy learning. RoboSplat can generate diverse and visually realistic data across six types of generalization (object poses, object types, camera views, scene appearance, lighting conditions, and embodiments).

- RSS 2025 | Project Page | Code

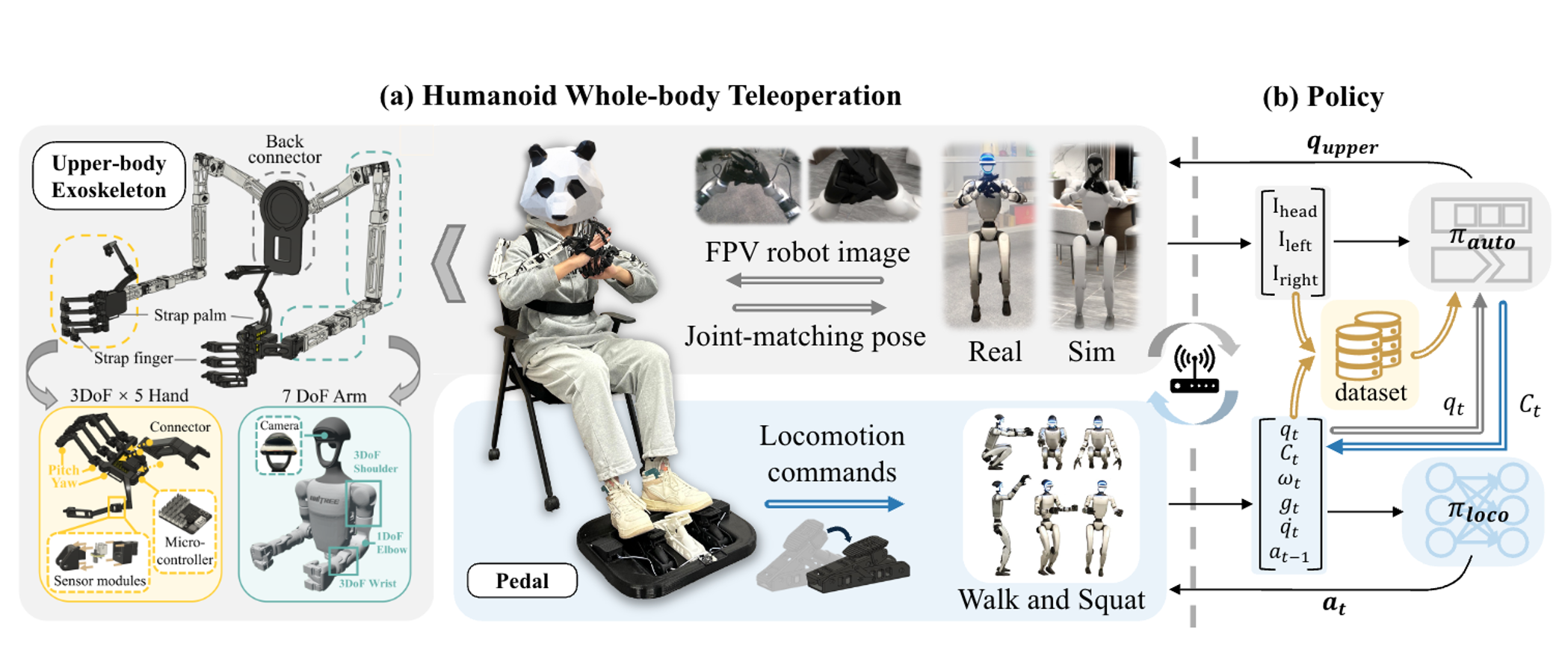

HOMIE: Humanoid Loco-Manipulation with Isomorphic Exoskeleton Cockpit

Qingwei Ben$^\ast$, Feiyu Jia$^\ast$, Jia Zeng, Junting Dong, Dahua Lin, Jiangmiao Pang

- We propose HOMIE, a novel humanoid teleoperation cockpit that integrates a humanoid loco-manipulation policy and a low-cost exoskeleton-based hardware system.

- RSS 2025 | Project Page | Code

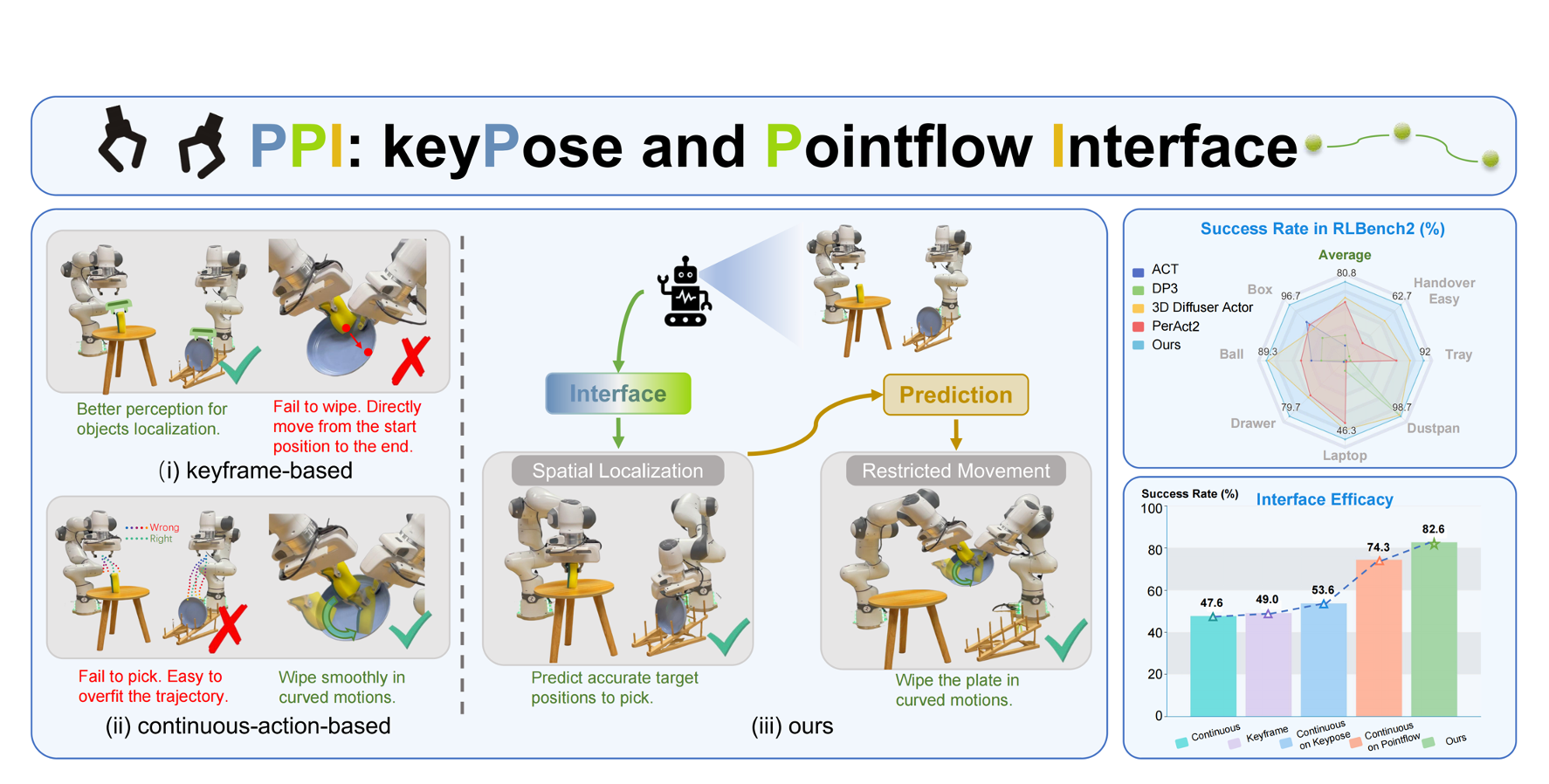

Gripper Keypose and Object Pointflow as Interfaces for Bimanual Robotic Manipulation

Yuyin Yang$^\ast$, Zetao Cai$^\ast$, Yang Tian, Jia Zeng, Jiangmiao Pang

- PPI integrates the prediction of target gripper poses and object pointflow with continuous action estimation, providing enhanced spatial localization and the flexibility to handle movement restrictions.

- RSS 2025 | Project Page | Code

🚗 Autonomous Driving

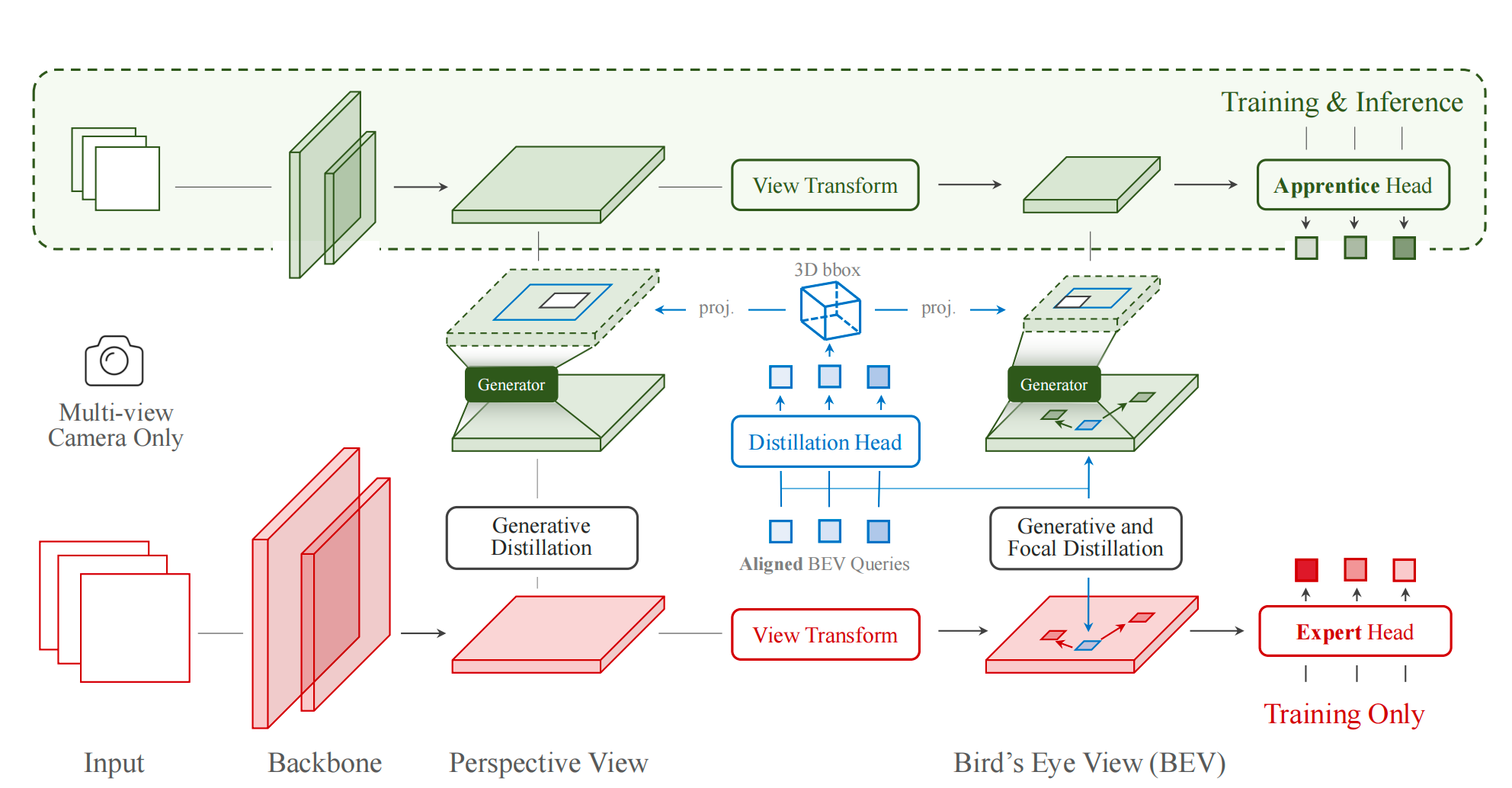

Distilling Focal Knowledge From Imperfect Expert for 3D Object Detection

Jia Zeng, Li Chen, Hanming Deng, Lewei Lu, Junchi Yan, Yu Qiao, Hongyang Li

- We apply knowledge distillation to camera-only 3D object detection and investigate how to distill focal knowledge when confronted with an imperfect 3D object detector teacher.

- CVPR 2023

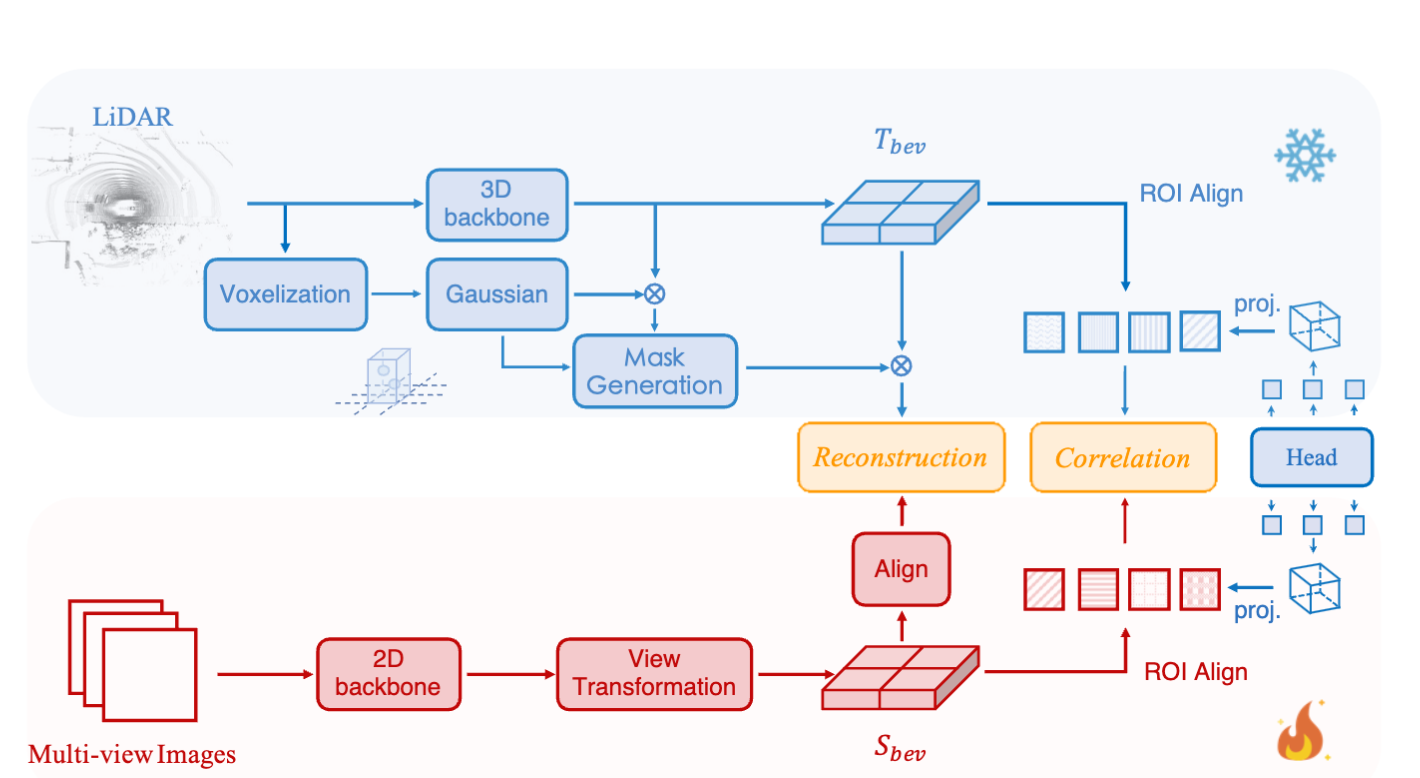

Geometric-aware Pretraining for Vision-centric 3D Object Detection

Linyan Huang, Huijie Wang, Jia Zeng, et al.

- We propose a geometric-aware pretraining method called GAPretrain, which distills geometry-rich information from the LiDAR modality into camera-based 3D object detectors.

- arXiv

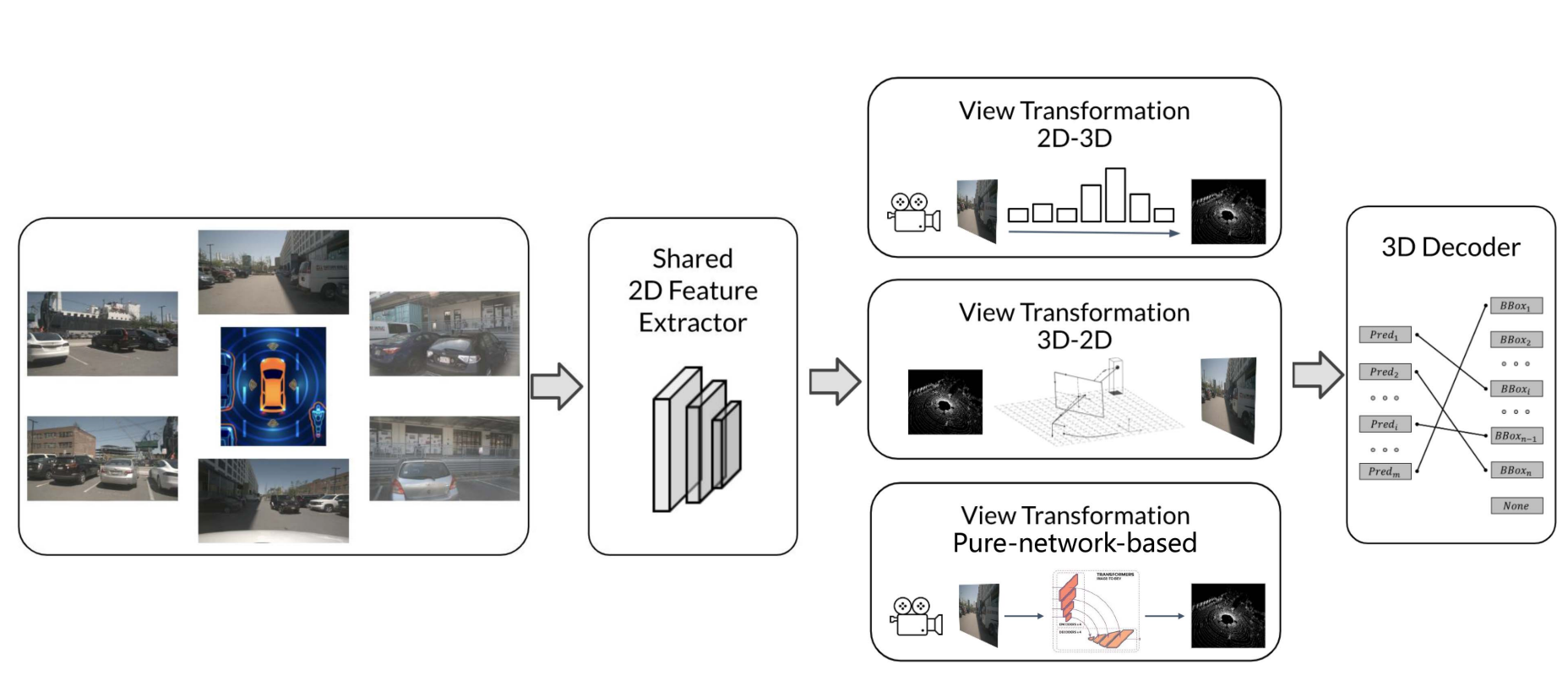

Delving Into the Devils of Bird’s-Eye-View Perception: A Review, Evaluation and Recipe

Hongyang Li$^\ast$, Chonghao Sima$^\ast$, Jifeng Dai$^\ast$, Wenhai Wang$^\ast$, Lewei Lu$^\ast$, Huijie Wang$^\ast$, Jia Zeng$^\ast$, Zhiqi Li$^\ast$, et al.

- We conduct a thorough review of recent work on Bird’s-Eye-View (BEV) perception and, based on our analysis, provide a practical recipe for the BEV design pipeline.

- IEEE T-PAMI | GitHub

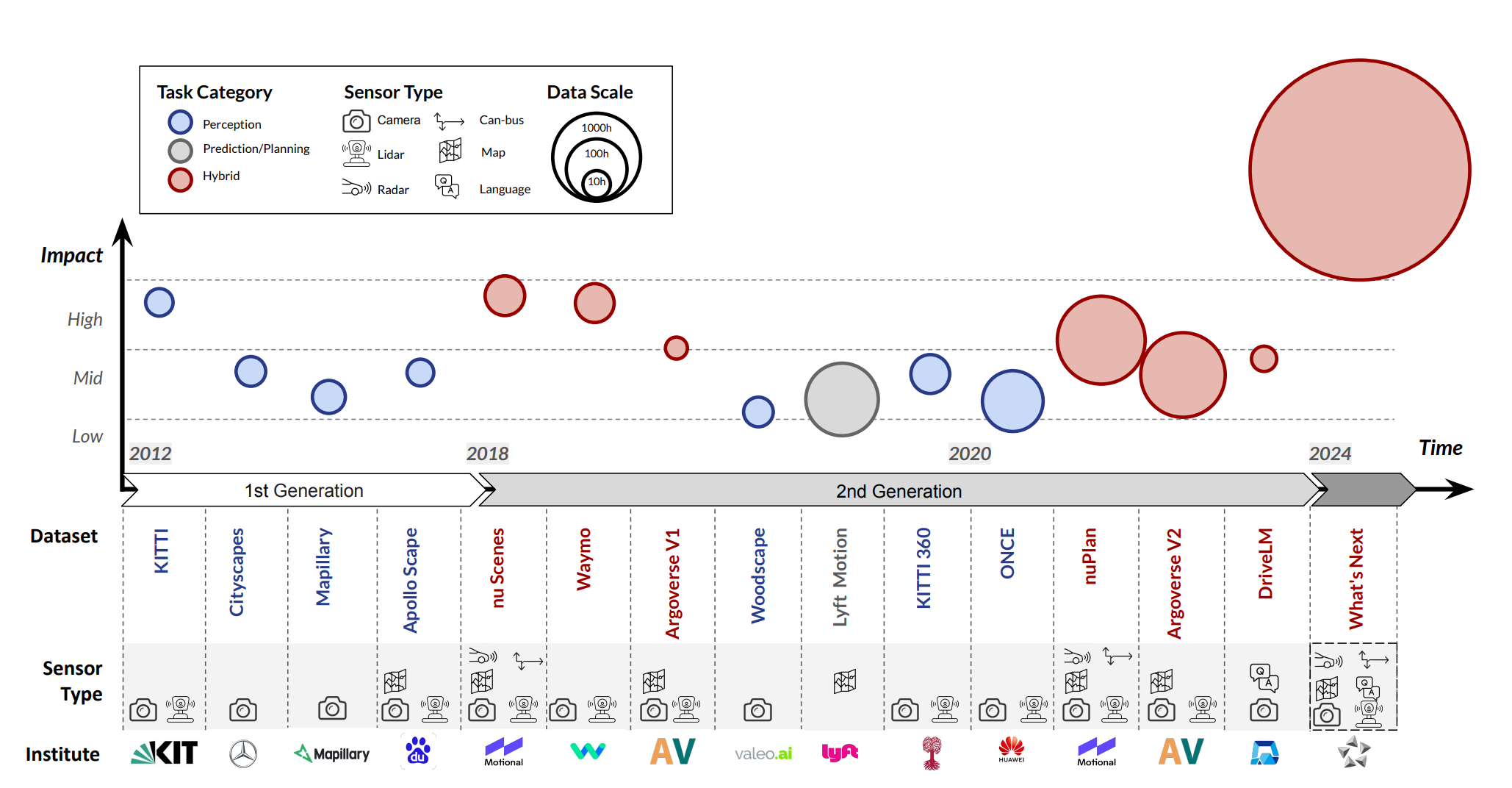

Open-sourced data ecosystem in autonomous driving: the present and future

Hongyang Li$^\ast$, Yang Li$^\ast$, Huijie Wang$^\ast$, Jia Zeng$^\ast$, Huilin Xu, et al.

- We undertake an exhaustive analysis and discussion of the characteristics and data scales that future third-generation autonomous driving datasets should possess.

- SCIENTIA SINICA Informationis | arXiv

💪🏻 Bio-Mechatronics & Human-Machine Interaction

- Jia Zeng, Yu Zhou, et al. Fatigue-sensitivity comparison of sEMG and A-Mode ultrasound based hand gesture recognition, IEEE Journal of Biomedical and Health Informatics (JBHI), 2021.

- Jia Zeng, Yu Zhou, et al. Cross modality knowledge distillation between A-mode ultrasound and surface electromyography, IEEE Transactions on Instrumentation and Measurement (TIM), 2022.

- Jia Zeng, Yixuan Sheng, et al. Adaptive learning against muscle fatigue for A-mode ultrasound based gesture recognition, IEEE Transactions on Instrumentation and Measurement (TIM), 2023.

- Yu Zhou, Jia Zeng, et al. Voluntary and FES-induced finger movement estimation using muscle deformation features, IEEE Transactions on Industrial Electronics (TIE), 2019.

🧑‍💻 Career Experience

Shanghai AI Laboratory, Researcher

2023.09 - present.

- Embodied foundation model and generalizable robotic manipulation.

Shanghai AI Laboratory, Research Intern

2022.04 - 2023.06, Supervisor: Prof. Hongyang Li.

- Bird’s-Eye-View perception and knowledge distillation for 3D object detection.

🎓 Education

Robotics Institute, Shanghai Jiao Tong University

2017.09 - 2023.08, Supervisor: Prof. Honghai Liu.

- Bio-signal-based human motion recognition and human-machine interaction.

💼 Service

- Reviewer for CVPR, ECCV, NeurIPS, ICLR, ICML, etc.

- Member of CAAI-Embodied AI Committee.